In the world of cloud storage, understanding the distribution of file sizes can be crucial for optimizing performance and costs. For users of Amazon S3, there is no built-in tool to quickly visualize this distribution. However, with a simple Bash script, we can generate a histogram that provides insights into the size categories of the objects stored within an S3 bucket.

The Script

The script takes a single argument, which is the name of the S3 bucket you wish to analyze. By using a combination of AWS CLI commands and AWK, the script outputs a simple text-based histogram representing the distribution of object sizes within the specified bucket.

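#!/usr/bin/env bash

# Exit on errors, unset variables, and failures anywhere in the pipeline.
set -euo pipefail

# The bucket name is required as the first argument.
bucket_name="${1:?Usage: $0 <bucket-name>}"

# List every object size in bytes, put one size per line, and count per bin.
aws s3api list-objects-v2 --bucket "$bucket_name" --query 'Contents[].Size' --output text | tr '\t' '\n' |
awk '
# Skip non-numeric lines, such as the "None" the CLI may print for an empty bucket.
/^[0-9]+$/ {
  if ($1 < 1024) bin["0-1KB"]++;
  else if ($1 < 10240) bin["1KB-10KB"]++;
  else if ($1 < 102400) bin["10KB-100KB"]++;
  else if ($1 < 1048576) bin["100KB-1MB"]++;
  else if ($1 < 10485760) bin["1MB-10MB"]++;
  else if ($1 < 104857600) bin["10MB-100MB"]++;
  else bin["100MB+"]++;
}
END {
  # AWK does not guarantee the iteration order of array keys.
  for (b in bin) {
    print b ": " bin[b]
  }
}'

How It Works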

1. Input: The script expects the bucket name as its first argument.

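2. Fetch Object Sizes: It calls `aws s3api list-objects-v2` with `--query 'Contents[].Size'`, so only the size of each object, in bytes, is returned. The AWS CLI paginates automatically, so buckets with more than 1,000 objects are covered as well.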

3. Text Processing: The output is piped through `tr` to replace tabs with newlines, creating a list where each size is on its own line.

4. AWK Script: The sizes are then piped into an AWK script, which assigns each one to one of seven predefined size bins. Each size is checked against a chain of if-else conditions, and the counter for the first matching bin is incremented.

5. Output: Once all sizes have been categorized, the AWK script prints the bin labels and counts in no particular order.
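Because the bins come out in no particular order, it can help to print them in a fixed ascending order and to show empty bins as zero. The following is a minimal sketch of that idea, not part of the original script: `bin_sizes` is a hypothetical helper name, it reads one size in bytes per line on standard input, and it assumes 1 KB = 1024 bytes.

```shell
#!/usr/bin/env bash

# Hypothetical helper: reads one object size (in bytes) per line on stdin
# and prints every bin in ascending order, including empty ones.
bin_sizes() {
  awk '
    BEGIN {
      # Bin labels and their exclusive upper bounds in bytes (1 KB = 1024 bytes).
      n = split("0-1KB 1KB-10KB 10KB-100KB 100KB-1MB 1MB-10MB 10MB-100MB 100MB+", label, " ")
      split("1024 10240 102400 1048576 10485760 104857600", upper, " ")
    }
    # Only purely numeric lines are counted; anything else is ignored.
    /^[0-9]+$/ {
      i = 1
      # Walk up the bounds until the size fits; the last bin is open-ended.
      while (i < n && $1 + 0 >= upper[i] + 0) i++
      count[i]++
    }
    END {
      for (i = 1; i <= n; i++) printf "%s: %d\n", label[i], count[i]
    }'
}

# Example with made-up sizes instead of a live bucket listing:
printf '%s\n' 512 2048 150000 5000000 | bin_sizes
```

To use it on a real bucket, the `aws s3api list-objects-v2 ... | tr '\t' '\n'` part of the script above could be piped into `bin_sizes` in place of the inline AWK program.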

Example Output and Interpretation

The example output of the script is straightforward:

0-1KB: 2

10KB-100KB: 112

100KB-1MB: 109

1KB-10KB: 1

1MB-10MB: 12

This output tells us that the bucket contains:

- Two objects of size between 0 and 1KB.

- One object of size between 1KB and 10KB.

- 112 objects of size between 10KB and 100KB.

- 109 objects of size between 100KB and 1MB.

- Twelve objects of size between 1MB and 10MB.

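The 10MB-100MB and 100MB+ bins are missing from the output because the AWK script only prints bins that received at least one object. In this particular case, then, no objects of 10MB or larger were found.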
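The counts are easier to compare at a glance when drawn as bars. As a rough sketch, the hypothetical helper `draw_bars` below reads `label: count` lines like the ones above on standard input and scales the bars so that the largest count spans a chosen width (40 characters by default, an arbitrary choice).

```shell
#!/usr/bin/env bash

# Hypothetical helper: reads "label: count" lines on stdin and redraws them
# as bars, scaled so the largest count spans `width` characters.
draw_bars() {
  local width="${1:-40}"
  awk -F': ' -v width="$width" '
    {
      label[NR] = $1
      count[NR] = $2 + 0
      if (count[NR] > max) max = count[NR]
    }
    END {
      for (i = 1; i <= NR; i++) {
        # Round up so that any non-empty bin shows at least one mark.
        len = (max > 0) ? int((count[i] * width + max - 1) / max) : 0
        bar = ""
        for (j = 0; j < len; j++) bar = bar "#"
        printf "%-11s %s %d\n", label[i], bar, count[i]
      }
    }'
}
```

Assuming the script above is saved under a name of your choosing, its output can be piped straight into `draw_bars`. The bars appear in whatever order the lines arrive.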

Use Cases

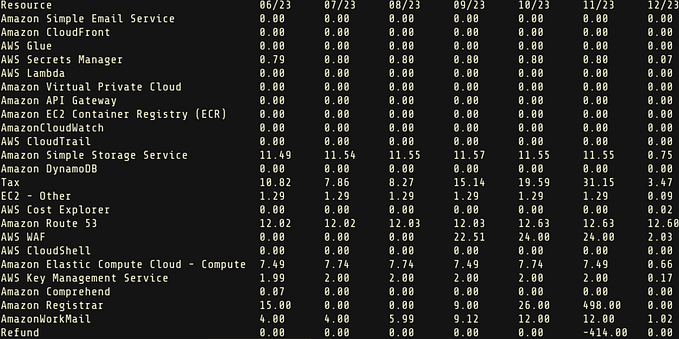

This histogram can be particularly useful for:

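- Cost Analysis: S3 bills storage and data transfer by volume and requests by count, so a bucket dominated by large objects tends to cost more to store and transfer, while one made up of many small objects generates more requests per gigabyte.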

- Performance Optimization: Knowing the size distribution can help in optimizing performance, as larger files may take longer to process or transfer.

- Storage Management: Identifying the distribution can aid in storage lifecycle policies, such as moving rarely accessed large files to cheaper storage classes.
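For the storage management case, object counts alone can mislead, since a handful of very large objects may hold most of the bytes. The sketch below is one way to check this. `large_object_share` is a hypothetical helper, and its default threshold of 100 MB is an assumption chosen to match the script's largest bin, not a recommendation.

```shell
#!/usr/bin/env bash

# Hypothetical helper: reads one object size (in bytes) per line on stdin and
# reports how many objects, and how many bytes, are at or above a threshold.
large_object_share() {
  local threshold="${1:-104857600}"  # assumed default: 100 MB (100 * 1024 * 1024)
  awk -v t="$threshold" '
    # Only purely numeric lines are counted.
    /^[0-9]+$/ {
      total_n++
      total_b += $1
      if ($1 + 0 >= t + 0) { big_n++; big_b += $1 }
    }
    END {
      printf "objects >= %s bytes: %d of %d\n", t, big_n, total_n
      # %.0f avoids integer overflow for large byte totals in some AWK versions.
      printf "bytes in those objects: %.0f of %.0f\n", big_b, total_b
    }'
}
```

It takes the same input as the AWK program in the script, so the `aws s3api list-objects-v2 ... | tr '\t' '\n'` pipeline can feed it directly, optionally with a different threshold as the first argument.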

Conclusion

With a simple Bash script, S3 users can gain valuable insights into their storage patterns, enabling better decision-making regarding cost, performance, and storage management. The histogram is a powerful visualization tool, even in its text-based form, for quick and actionable analytics.